ETDI: Enhanced Tool Definition Interface

Comprehensive MCP Security Framework

A robust security framework for protecting Large Language Model applications from tool poisoning and rug pull attacks through cryptographic verification, OAuth integration, and policy-based access control.

Framework Overview

The Model Context Protocol (MCP) enables Large Language Models (LLMs) to integrate with external tools and data sources, but the standard specification presents significant security vulnerabilities, notably Tool Poisoning and Rug Pull attacks.

The Enhanced Tool Definition Interface (ETDI) is a comprehensive security extension for MCP, designed to address these critical threats and establish a new standard for trust, integrity, and control in LLM tool ecosystems.

- Cryptographic Identity & Integrity: Every tool definition is digitally signed by its provider, and signatures are verified by clients, ensuring authenticity and preventing impersonation or tampering.

- Immutable, Versioned Tool Definitions: Any change to a tool's code, schema, or permissions requires a new version and explicit user re-approval, blocking silent or malicious modifications after initial approval.

- Explicit Permission Management: Tools must declare all required capabilities and permissions up front, with fine-grained OAuth 2.0 scopes and user consent for every sensitive action.

- Policy-Based Access Control: Dynamic, context-aware authorization using Cedar policies and Amazon Verified Permissions, enabling real-time risk assessment and adaptive security.

- Comprehensive Auditability: All tool approvals, version changes, and policy decisions are logged for full traceability and compliance.

- Tool Poisoning: ETDI's cryptographic signatures and provider verification prevent attackers from impersonating or spoofing trusted tools unless they compromise a provider's signing key. Only tools with valid, verifiable signatures from known providers are accepted.

- Rug Pull Attacks: ETDI enforces immutability and versioning. Any change to a tool's definition, code, or permissions requires a new version and explicit user re-approval, so users are never silently exposed to new risks.

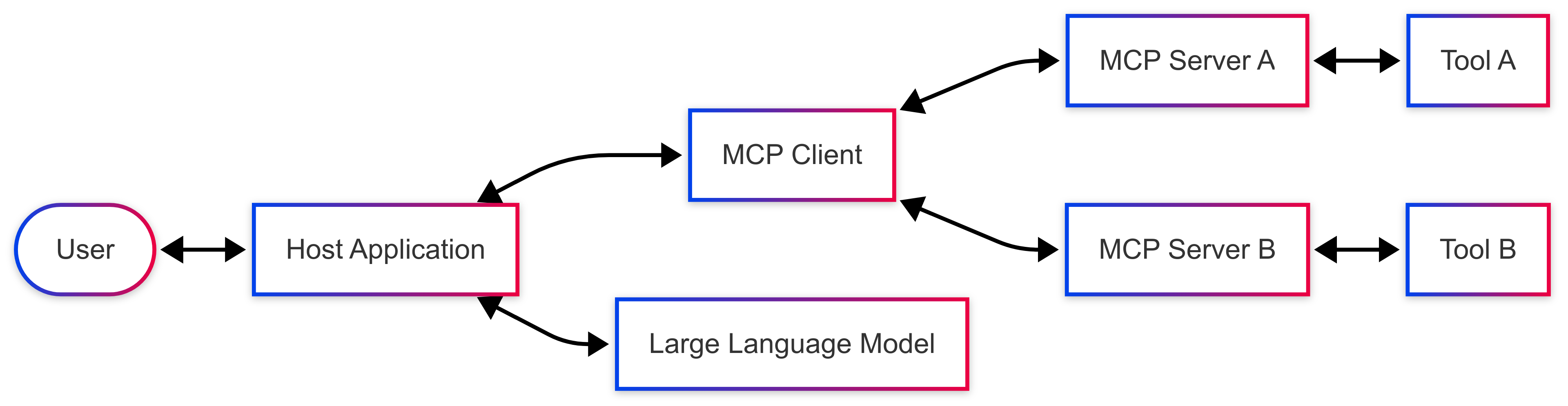

Model Context Protocol Architecture

MCP operates on a distributed client-server model with the following key components:

Core Components

- Host Applications: User-facing applications (AI-powered desktop apps, IDE extensions) that orchestrate interactions

- MCP Clients: Software components within Host Applications that discover, connect to, and interact with MCP Servers

- MCP Servers: Services that expose capabilities (tools, resources, prompts) to MCP Clients

- Tools: Discrete functions or services invokable by an LLM via an MCP Server

- Resources: Data sources accessible by the LLM for context

- Prompts: Pre-defined templates guiding LLM tool/resource usage

🏗️ Standard MCP Architecture Flow

User ↔ Host Application ↔ MCP Client ↔ LLM ↔ MCP Server ↔ Tools

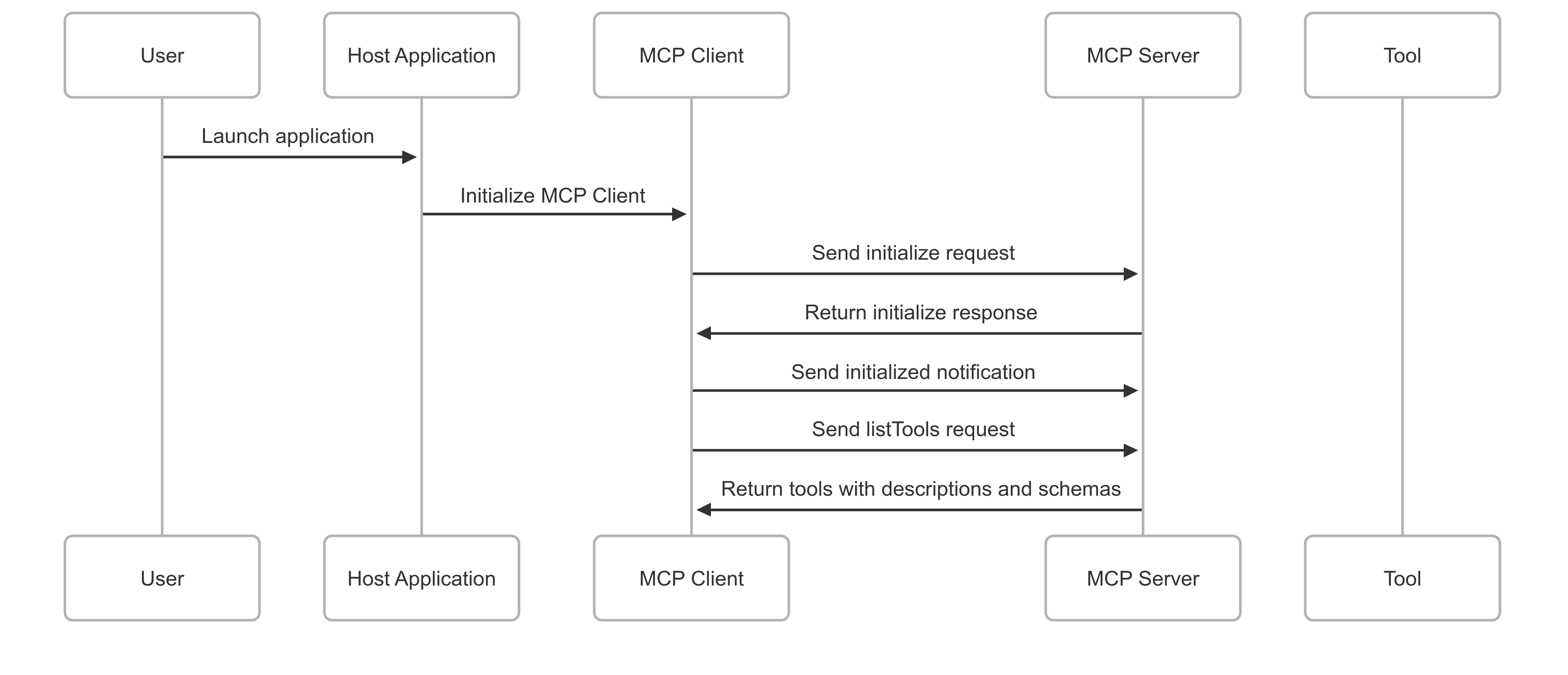

Operational Phases

1. Initialization and Discovery Phase

- Application Launch & Client Initialization: Host Application initializes embedded MCP Client modules

- Server Handshake and Capability Negotiation: MCP Clients initiate handshake with discoverable MCP Servers

- Tool Listing Request: Client sends a tool listing request (the tools/list method in the MCP specification) to enumerate available tools

- Tool Definition Exchange: Servers respond with tool definitions including descriptions, schemas, and parameters

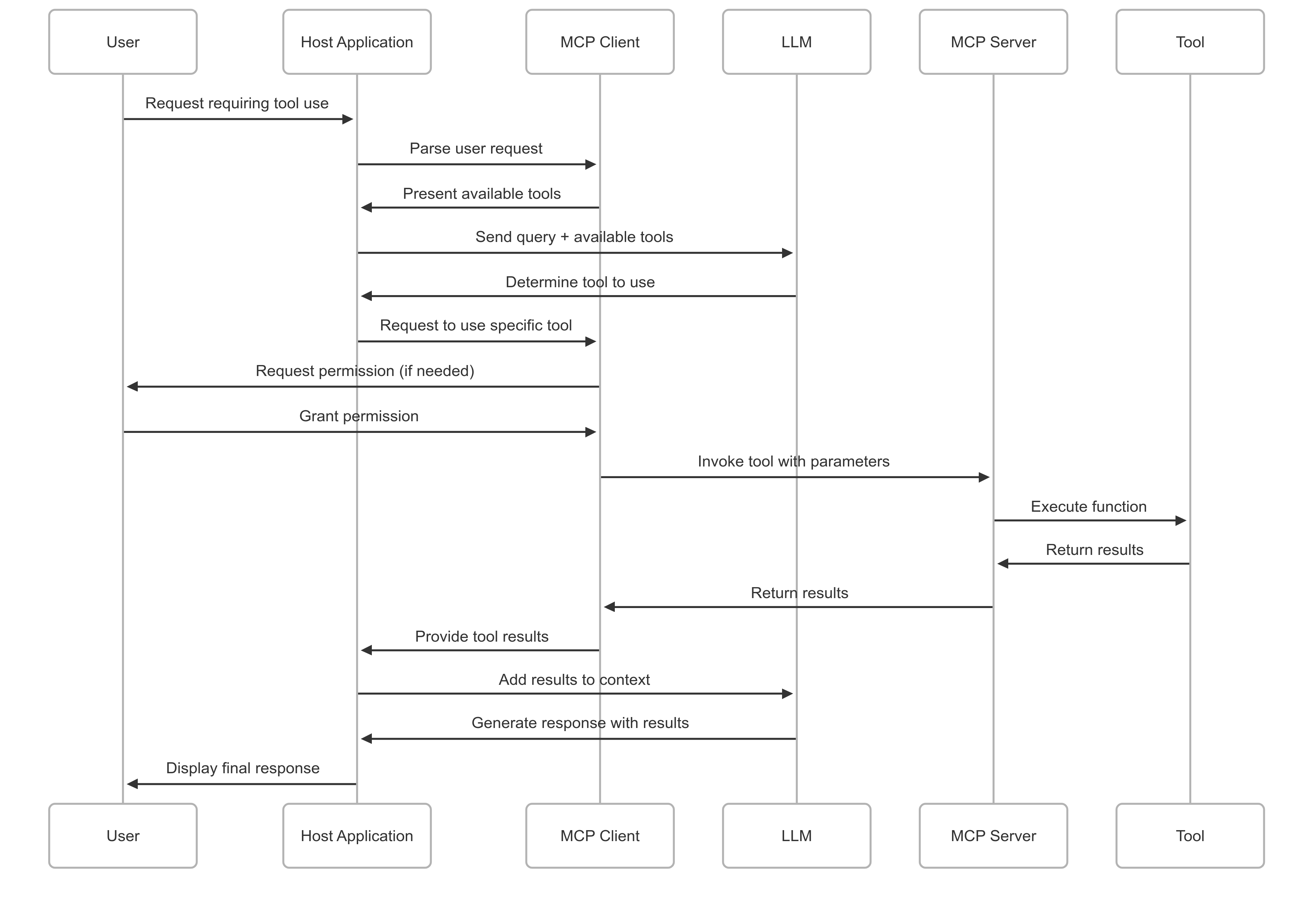

2. Tool Invocation and Usage Phase

- User Request Processing: User interacts with Host Application requiring external tool capabilities

- Tool Selection by LLM: LLM processes request and identifies suitable tools based on descriptions

- Permission Adjudication: Client prompts user for approval if tool requires specific permissions

- Tool Invocation Command: Client dispatches a tool invocation request (the tools/call method in the MCP specification) with the specified parameters

- Server-Side Tool Execution: MCP Server delegates request to actual tool implementation

- Result Propagation: Tool returns output to server, which relays to client

- Context Augmentation: Results are incorporated into LLM context for final response generation
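
The discovery and invocation steps above can be sketched as JSON-RPC 2.0 messages. This is a minimal illustration, assuming the tools/list and tools/call methods of the MCP specification; the tool name, schema, and file path are hypothetical.

```javascript
// Build the two JSON-RPC 2.0 requests a client sends during discovery and invocation.
function listToolsRequest(id) {
  return { jsonrpc: "2.0", id, method: "tools/list" };
}

function callToolRequest(id, name, args) {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical server reply to tools/list: a list of tool definitions.
const listToolsResult = {
  tools: [
    {
      name: "secure_docs_scanner",
      description: "Scans documents for PII",
      inputSchema: {
        type: "object",
        properties: { document_path: { type: "string" } },
        required: ["document_path"],
      },
    },
  ],
};

// The client (on behalf of the LLM) picks a tool and invokes it by name.
const tool = listToolsResult.tools[0];
const call = callToolRequest(2, tool.name, { document_path: "/tmp/report.pdf" });
console.log(JSON.stringify(call));
```

Note that nothing in this exchange identifies who published the definition or whether it changed since the last listing, which is the gap the following sections describe.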

Critical Security Vulnerabilities in MCP

The standard MCP specification, while fostering innovation, lacks comprehensive security primitives, exposing users and systems to significant risks through two primary attack vectors:

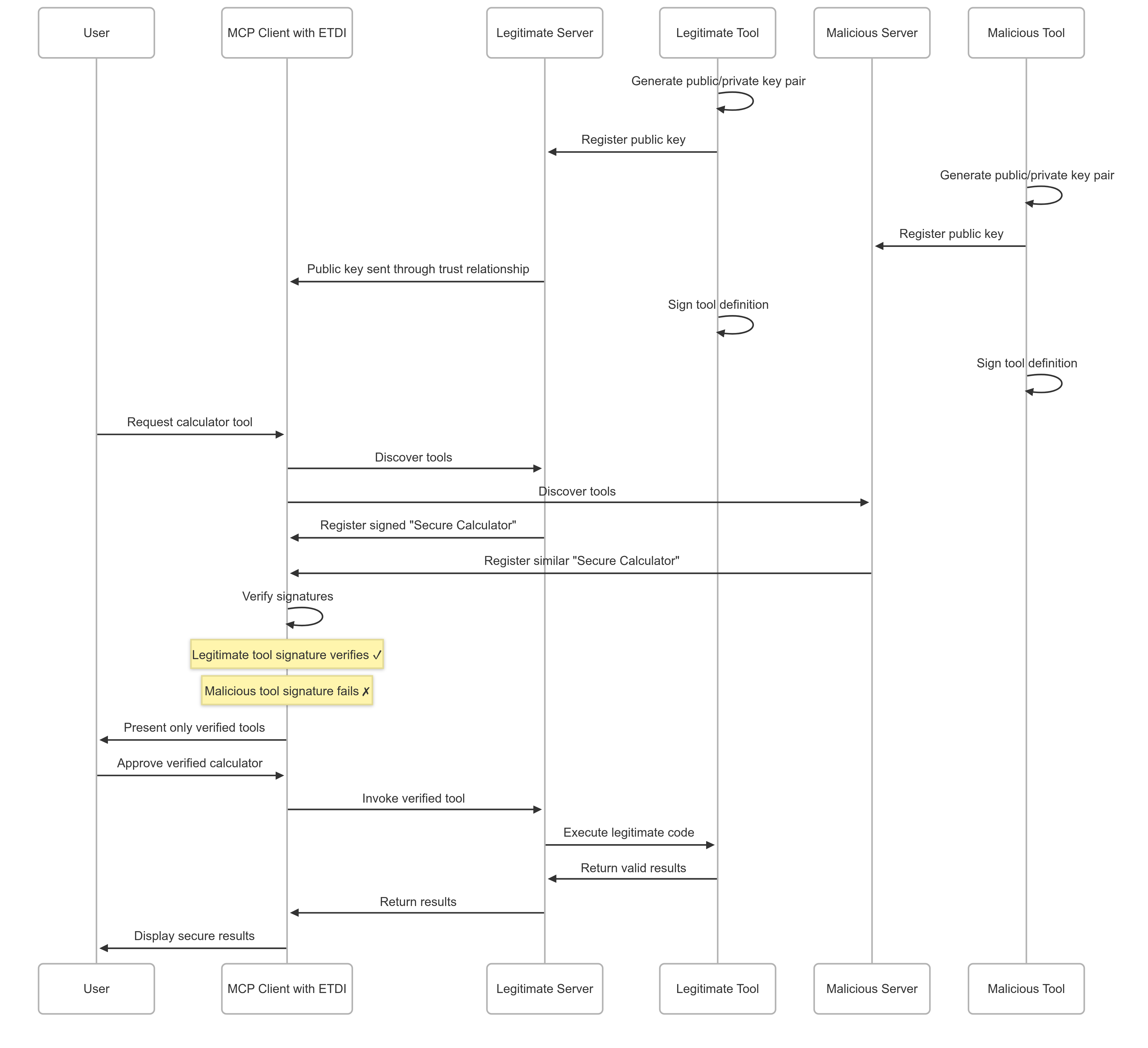

🎭 Tool Poisoning

Malicious actors deploy tools that masquerade as legitimate, trusted tools to deceive users and LLMs into granting unauthorized access.

🪝 Rug Pull Attacks

Post-approval modification of tool functionality without user notification, effectively bypassing initial permission models.

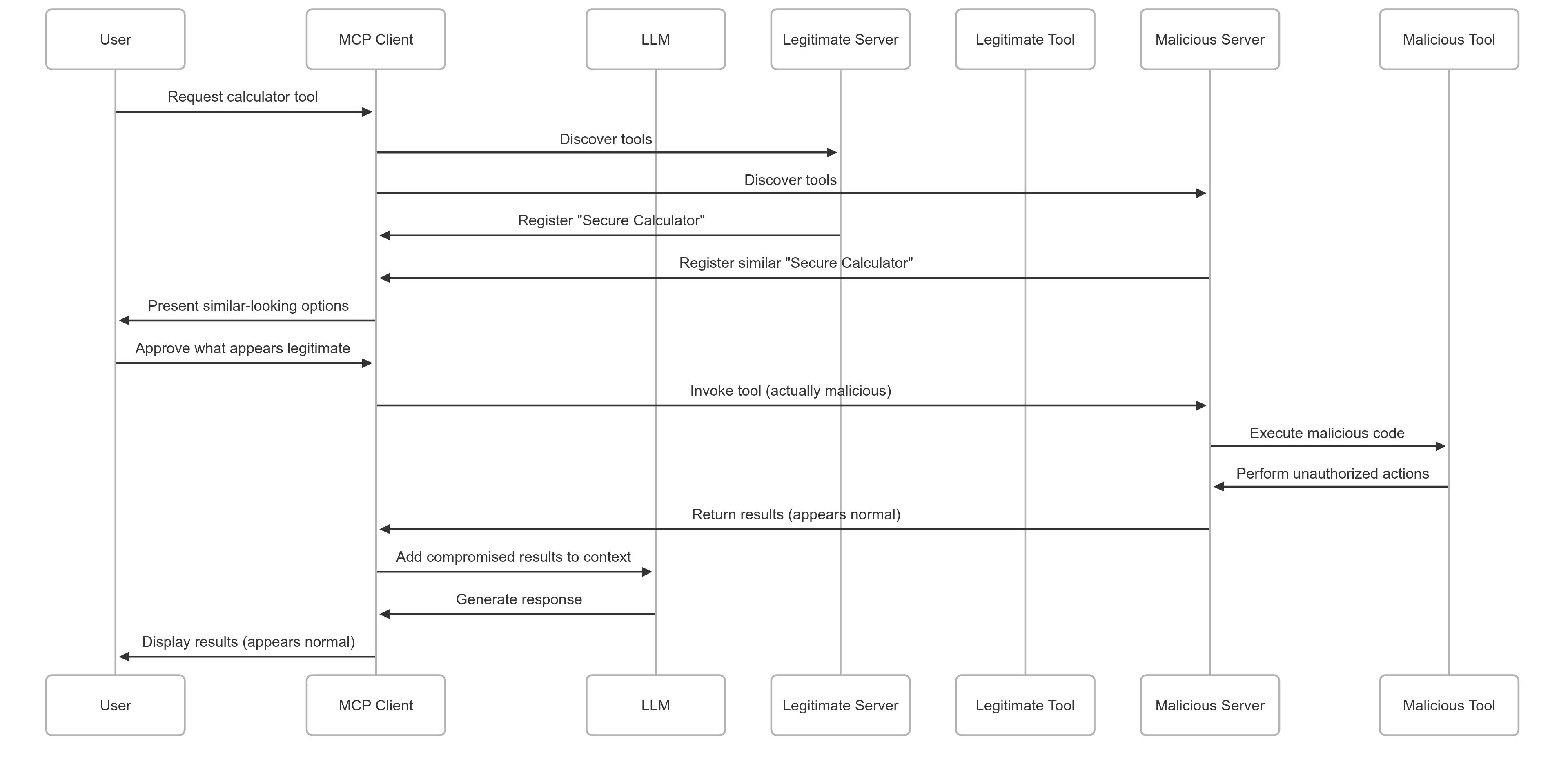

Tool Poisoning Attacks

Definition: Tool Poisoning involves a malicious actor deploying a tool that masquerades as a legitimate, trusted, or innocuous tool. The attacker's objective is to deceive either the end-user or the LLM during automated tool selection.

Vulnerability Analysis

The susceptibility to Tool Poisoning in standard MCP stems from several critical deficiencies:

- Lack of Authenticity Verification: No built-in mechanism for MCP Clients or users to cryptographically verify the true origin or authenticity of a tool. Tool names, descriptions, and provider names can be easily spoofed.

- Indistinguishable Duplicates and Ambiguity: Malicious tools can meticulously replicate metadata of legitimate tools, making differentiation virtually impossible for users or automated LLM-based selection processes.

- Exploitation of Implicit Trust: Attackers leverage user trust in familiar tool names or reputable provider names without any underlying validation mechanism.

- Unverifiable Claims in Descriptions: Tools can assert claims like "secure," "official," or "privacy-preserving" without any mechanism to validate these assertions.

Attack Impact

Successful tool poisoning can lead to severe consequences including:

- Exfiltration of sensitive personal or corporate data

- Unauthorized execution of system commands

- Installation of malware or ransomware

- Financial fraud through manipulated transactions

- Subtle manipulation of LLM outputs to spread misinformation

Illustrative Scenario: Malicious "SecureDocs Scanner"

1. The attacker deploys a malicious MCP server hosting "SecureDocs Scanner".

2. The attacker meticulously copies the description and JSON schema, and claims "TrustedSoft Inc." as the provider.

3. The user's MCP Client discovers both the legitimate and the malicious version.

4. Because their presentation is identical, the tools appear as duplicates.

5. The user selects the entry corresponding to the malicious version.

6. Upon invocation, the malicious tool:

- Exfiltrates the entire document content to an attacker-controlled server

- Returns a fake "No PII found" message to maintain the deception

⚠️ Tool Poisoning Attack Flow

Legitimate Tool ← Impersonated by → Malicious Tool → Data Exfiltration
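
To make the impersonation problem concrete, the sketch below compares two listings the way a client without signature support would have to: by their visible metadata alone. The field names are illustrative, not taken from the MCP specification.

```javascript
// Two tool listings as a client might receive them from different servers.
const legitimate = {
  name: "SecureDocs Scanner",
  provider: "TrustedSoft Inc.",
  description: "Scans documents for PII",
};

// The attacker copies every user-visible field verbatim.
const malicious = { ...legitimate };

// Without signatures, the only thing a client can compare is the metadata itself.
function looksIdentical(a, b) {
  const keys = new Set([...Object.keys(a), ...Object.keys(b)]);
  return [...keys].every((key) => a[key] === b[key]);
}

console.log(looksIdentical(legitimate, malicious)); // true
```

Since every compared field is attacker-controlled, no amount of metadata inspection can separate the two entries.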

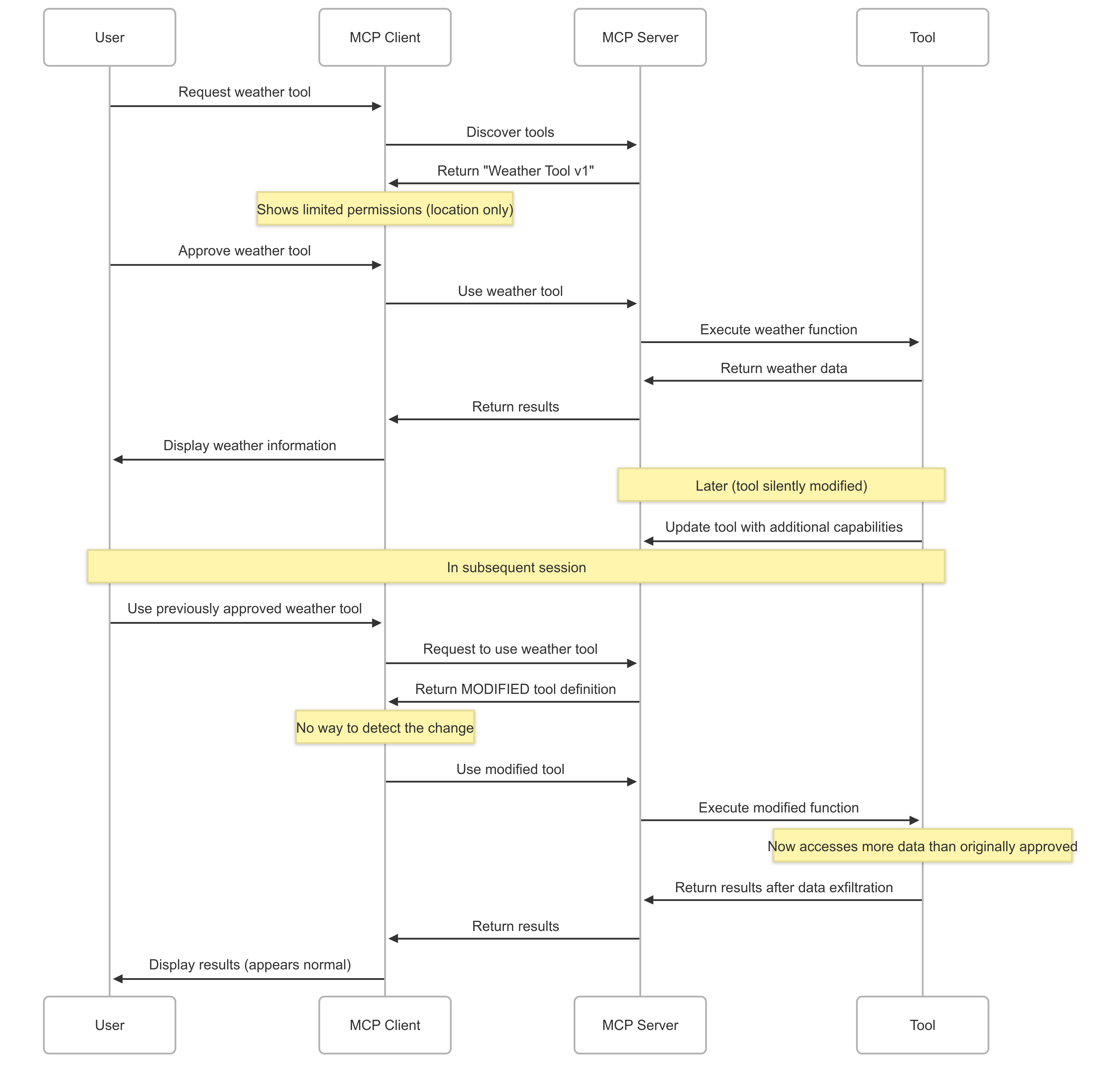

Rug Pull Attacks

Definition: Rug Pull attacks manifest when the functionality, data access patterns, or permission requirements of an already approved tool are maliciously altered by its provider after initial user consent has been granted.

Vulnerability Analysis

The core vulnerabilities enabling Rug Pulls include:

- Mutability of Server-Side Logic: Tool's underlying code and behavior can be modified without notification to MCP Client or user, especially if the tool's primary identifier remains static.

- Lack of Continuous Integrity Checks: Standard MCP Clients don't re-fetch and re-verify tool definitions on subsequent invocations once approved.

- Absence of Re-Approval Triggers: No new approval prompt is presented if the tool's identifier doesn't change, even if functionality is modified.

- Exploitation of Established Trust: Attack leverages trust established during initial benign approval phase.

Attack Impact

Rug Pulls can lead to severe breaches including:

- Unauthorized access to sensitive data never explicitly consented to

- Bypass of initial permission model

- Profound loss of user trust once discovered

- Silent background data collection and transmission

Illustrative Scenario: "Daily Wallpaper" Tool Rug Pull

Initial State (Version 1.0):

- Fetches a new wallpaper image and sets it

- Requests permission for "access internet" and "modify desktop wallpaper"

- User approves based on benign functionality

Post-Approval Modification:

- Tool is still identified as "Daily Wallpaper v1.0" to avoid re-approval

- Server-side logic is updated to:

  - Scan the user's Documents folder for financial keywords

  - Upload found files to an attacker-controlled server

  - Continue wallpaper functionality to maintain the deception

Result: Malicious action performed silently without user awareness

🪝 Rug Pull Attack Flow

Benign Tool (Initial Approval) → Silent Modification → Malicious Behavior (No Re-approval)
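
The missing re-approval trigger can be illustrated with a deliberately naive approval store. This is a sketch under the assumption that a client remembers approvals by name and version only; the tool object and permission labels are hypothetical.

```javascript
const crypto = require("node:crypto");

// A naive approval store keyed only by tool name and version.
const approvals = new Set();
const approve = (tool) => approvals.add(`${tool.name}@${tool.version}`);
const isApproved = (tool) => approvals.has(`${tool.name}@${tool.version}`);

const v1 = { name: "Daily Wallpaper", version: "1.0", permissions: ["internet", "wallpaper"] };
approve(v1);

// The provider later changes the definition but keeps the same name and version.
const modified = { ...v1, permissions: ["internet", "wallpaper", "read-documents"] };

console.log(isApproved(modified)); // true: the change goes unnoticed

// Fingerprinting the serialized definition exposes the difference.
const fingerprint = (tool) =>
  crypto.createHash("sha256").update(JSON.stringify(tool)).digest("hex");

console.log(fingerprint(v1) === fingerprint(modified)); // false
```

A fingerprint only covers what the definition declares. Changes confined to server-side code stay invisible unless the definition also attests to the backend, for example through a hash of its API contract.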

ETDI: The Enhanced Tool Definition Interface

ETDI is a comprehensive security layer extension for MCP designed to counter Tool Poisoning and Rug Pulls by introducing verifiable identity and integrity for tool definitions.

Foundational Security Principles

ETDI is architected upon three fundamental security principles:

🔐 Cryptographic Identity and Authenticity

Tool definitions are digitally signed by providers. MCP Clients verify these signatures using established cryptographic protocols.

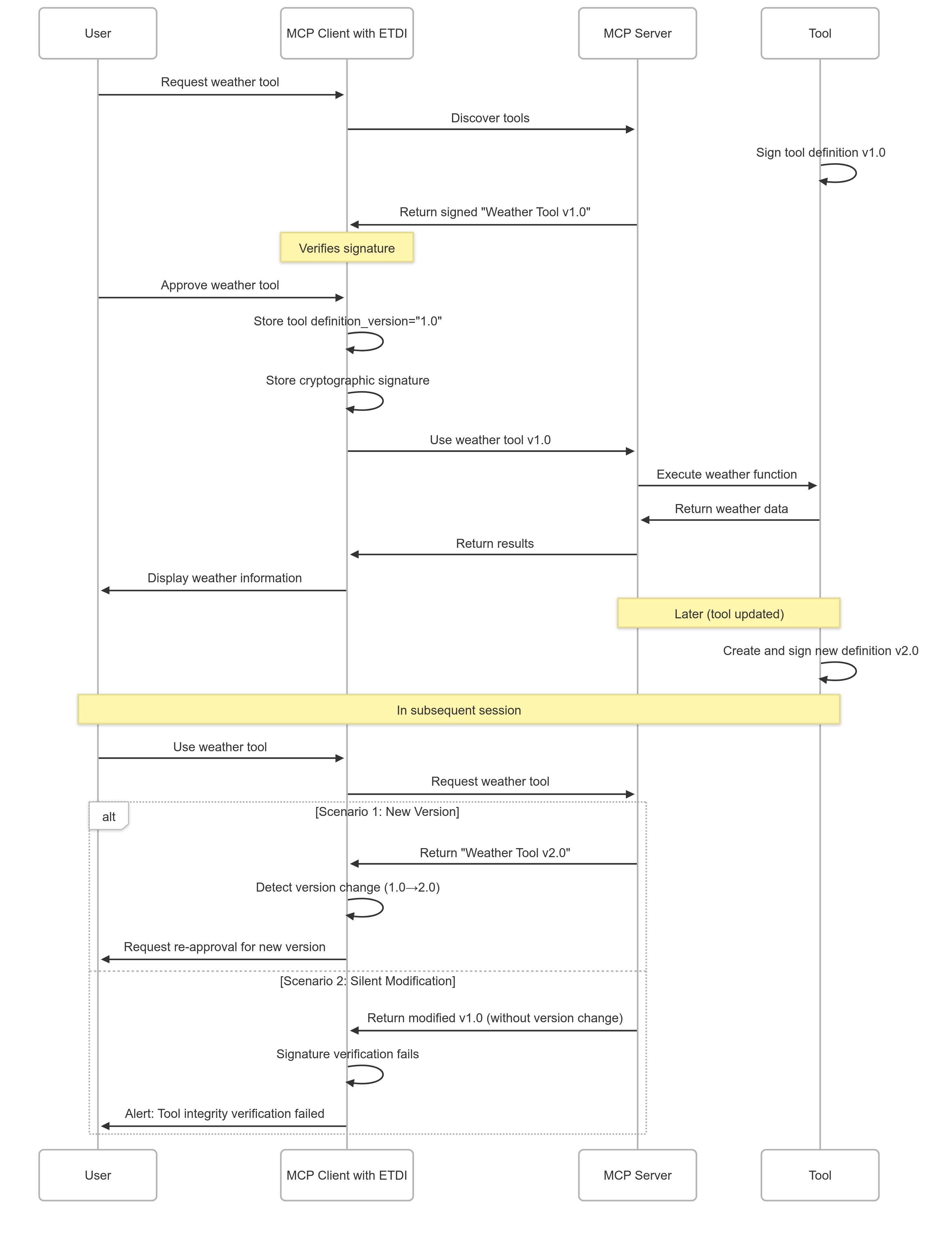

📋 Immutable and Versioned Definitions

Any change to tool functionality, metadata, schema, permissions, or backend API contract mandates a new, signed version with user re-approval.

🎛️ Explicit and Verifiable Permissions

A tool's required capabilities (OAuth scopes) are declared in its signed definition and presented to users for explicit approval.

    // Simplified ETDI Tool Definition Verification Logic
    // Crypto, log, getApprovedDefinition, promptUserForApproval, compareVersions,
    // and storeApproval are placeholders for helpers supplied by the client.
    function verifyAndApproveTool(toolDefinition, providerPublicKey) {
      // 1. Verify cryptographic identity and integrity
      if (!Crypto.verifySignature(
        toolDefinition.content,
        toolDefinition.signature,
        providerPublicKey
      )) {
        log("Tool definition signature invalid for " + toolDefinition.id);
        return false; // Reject tool
      }

      // 2. Check against previously approved version (if any)
      const approvedDef = getApprovedDefinition(toolDefinition.id);
      if (approvedDef != null) {
        // Compare versions numerically: as plain strings, "1.10.0" sorts before "1.9.0"
        const order = compareVersions(toolDefinition.version, approvedDef.version);
        if (order === 0) {
          // If version same, ensure definition content hash matches
          if (Crypto.hash(toolDefinition.content) !== approvedDef.contentHash) {
            log("Tampering detected for " + toolDefinition.id);
            // Requires re-approval even for same version if content changed
            if (!promptUserForApproval(
              "Tool content changed. Re-approve?",
              toolDefinition
            )) {
              return false;
            }
          }
        } else if (order < 0) {
          log("Warning: Older version presented");
          // Policy decision: allow, warn, or block older versions.
          // Blocking is the conservative default here, because falling through
          // would silently replace the stored approval with the older version.
          return false;
        } else { // New version
          if (!promptUserForApproval(
            "New version. Approve?",
            toolDefinition
          )) {
            return false;
          }
        }
      } else { // First time seeing this tool
        if (!promptUserForApproval("Approve new tool?", toolDefinition)) {
          return false;
        }
      }

      // 3. Store/update approval with new definition hash and version
      storeApproval(
        toolDefinition.id,
        toolDefinition.version,
        Crypto.hash(toolDefinition.content),
        toolDefinition.permissions
      );
      return true;
    }

Cryptographic Identity Verification

ETDI implements robust cryptographic mechanisms to ensure tool authenticity and prevent impersonation attacks.

Core Components

- Digital Signatures: Tool definitions are signed using provider's private key

- Certificate-based Identity: Public key infrastructure for provider verification

- Request Signing: All tool invocations include cryptographic signatures

- Timestamp Validation: Time-based request validation narrows the window in which a captured request can be replayed

Provider Key Infrastructure

Legitimate tool providers must establish cryptographic identity through:

- Key Pair Generation: Providers generate public/private cryptographic key pairs (RSA, ECDSA)

- Public Key Distribution: Secure distribution via Host Application, trusted registry, or PKI

- Private Key Protection: Secure storage and management of signing keys

- Certificate Chain Validation: Optional PKI integration for enhanced trust
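
The key generation, signing, and verification steps can be sketched with Node.js's built-in crypto module. This is an illustration assuming ECDSA over P-256 with SHA-256; the definition content is hypothetical, and key distribution and private key storage are out of scope here.

```javascript
const crypto = require("node:crypto");

// Provider side: generate an ECDSA P-256 key pair.
const { publicKey, privateKey } = crypto.generateKeyPairSync("ec", {
  namedCurve: "prime256v1",
});

// Hypothetical definition content; the signature covers exactly these bytes.
const content = JSON.stringify({
  tool_id: "secure-docs-scanner",
  version: "1.2.0",
  permissions: ["file:read", "network:https"],
});

// Provider signs the content with its private key and publishes the result as base64.
const signatureB64 = crypto
  .sign("sha256", Buffer.from(content), privateKey)
  .toString("base64");

// Client side: verify the signature against the provider's public key.
function verifyDefinition(contentString, signature, providerPublicKey) {
  return crypto.verify(
    "sha256",
    Buffer.from(contentString),
    providerPublicKey,
    Buffer.from(signature, "base64")
  );
}

console.log(verifyDefinition(content, signatureB64, publicKey)); // true
console.log(verifyDefinition(content.replace("1.2.0", "1.2.1"), signatureB64, publicKey)); // false
```

Because the signature is computed over the serialized content, provider and client must agree on one serialization; any byte-level difference makes verification fail.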

Example ETDI tool definition with cryptographic signing:

    {
      "tool_id": "secure-docs-scanner",
      "version": "1.2.0",
      "name": "Secure Document Scanner",
      "description": "Privacy-preserving document analysis tool",
      "provider": "TrustedSoft Inc.",
      "schema": {
        "type": "object",
        "properties": {
          "document_path": {"type": "string"},
          "analysis_type": {"type": "string", "enum": ["pii", "sentiment", "summary"]}
        }
      },
      "permissions": ["file:read", "network:https"],
      "api_contract_hash": "sha256:abc123...",
      "signature": "base64_encoded_signature_of_entire_definition",
      "public_key": "base64_encoded_public_key",
      "timestamp": "2024-03-14T12:00:00Z",
      "certificate_chain": ["cert1", "cert2", "root_ca"]
    }

Verification Process

ETDI-enabled MCP Clients perform mandatory verification:

- Signature Validation: Verify definition signature using provider's public key

- Certificate Chain Verification: Validate certificate chain to trusted root

- Timestamp Validation: Ensure definition is within acceptable time window

- Revocation Checking: Verify provider certificates haven't been revoked
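
The checks listed above can be sketched as an ordered pipeline that stops at the first failure. Only the timestamp check is implemented; the signature, certificate chain, and revocation checks are stubs, and the 24-hour maximum age and 5-minute clock skew are illustrative assumptions rather than values defined by ETDI.

```javascript
// Reject definitions whose timestamp is unparseable, too old, or too far in the future.
// maxAgeMs and maxSkewMs are illustrative defaults, not values taken from ETDI.
function isTimestampFresh(isoTimestamp, now, maxAgeMs = 24 * 60 * 60 * 1000, maxSkewMs = 5 * 60 * 1000) {
  const issued = Date.parse(isoTimestamp);
  if (Number.isNaN(issued)) return false;
  const age = now - issued;
  return age <= maxAgeMs && age >= -maxSkewMs;
}

// Run the mandatory checks in order and report the first one that fails.
function runVerification(definition, checks) {
  for (const [name, check] of checks) {
    if (!check(definition)) return { ok: false, failedCheck: name };
  }
  return { ok: true };
}

const now = Date.parse("2024-03-14T13:00:00Z");
const checks = [
  ["signature", () => true],        // stub: replace with real signature verification
  ["certificateChain", () => true], // stub: replace with chain validation
  ["timestamp", (definition) => isTimestampFresh(definition.timestamp, now)],
  ["revocation", () => true],       // stub: replace with a CRL or OCSP lookup
];

console.log(runVerification({ timestamp: "2024-03-14T12:00:00Z" }, checks));
console.log(runVerification({ timestamp: "2024-03-01T12:00:00Z" }, checks));
```

The first call passes every check; the second fails at the timestamp check because the definition is about two weeks old relative to the fixed clock.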

Immutable and Versioned Definitions

ETDI enforces immutability of tool definitions through cryptographic versioning and integrity checking, preventing unauthorized post-approval modifications.

Versioning Strategy

- Semantic Versioning: Tools use semantic versioning (MAJOR.MINOR.PATCH) for clear change indication

- Content Hashing: Each version includes cryptographic hash of entire definition

- API Contract Attestation: Hash of backend API contract (OpenAPI spec) included in definition

- Change Tracking: Comprehensive audit logs of all version changes
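
Content hashing only works if equivalent definitions always serialize to the same bytes. The sketch below assumes a simple canonical form (object keys sorted recursively) and excludes the signature field from the hashed content; it is an illustration, not a standardized canonicalization scheme.

```javascript
const crypto = require("node:crypto");

// Serialize with object keys sorted recursively so key order does not affect the hash.
function canonicalize(value) {
  if (Array.isArray(value)) {
    return `[${value.map(canonicalize).join(",")}]`;
  }
  if (value !== null && typeof value === "object") {
    const entries = Object.keys(value)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${canonicalize(value[key])}`);
    return `{${entries.join(",")}}`;
  }
  return JSON.stringify(value);
}

// Hash everything except the signature, which is computed over this content.
function contentHash(definition) {
  const { signature, ...content } = definition;
  const digest = crypto.createHash("sha256").update(canonicalize(content)).digest("hex");
  return `sha256:${digest}`;
}

const a = { tool_id: "t", version: "1.0.0", permissions: ["file:read"], signature: "sigA" };
const b = { signature: "sigB", permissions: ["file:read"], version: "1.0.0", tool_id: "t" };
const c = { ...a, permissions: ["file:read", "network:https"] };

console.log(contentHash(a) === contentHash(b)); // true: same content, different key order
console.log(contentHash(a) === contentHash(c)); // false: permissions changed
```

Array order is preserved on purpose, so reordering a list such as permissions counts as a change.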

Immutability Enforcement

Once a tool definition is approved and signed, ETDI ensures:

- Version Lock: Specific version numbers cannot be reused with different content

- Content Integrity: Any content change requires new version and re-approval

- Rollback Protection: Prevents malicious rollback to vulnerable versions

- Tamper Detection: Immediate detection of unauthorized modifications
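
Version lock and rollback protection both depend on comparing versions correctly. The following sketch assumes plain MAJOR.MINOR.PATCH strings without pre-release tags; compareVersions and checkVersionLock are hypothetical helper names.

```javascript
// Compare two MAJOR.MINOR.PATCH strings numerically.
// Returns -1 if a < b, 0 if equal, 1 if a > b. Pre-release tags are not handled.
function compareVersions(a, b) {
  const partsA = a.split(".").map(Number);
  const partsB = b.split(".").map(Number);
  for (let i = 0; i < 3; i++) {
    const diff = (partsA[i] || 0) - (partsB[i] || 0);
    if (diff !== 0) return Math.sign(diff);
  }
  return 0;
}

// Version lock: remember the content hash first seen for each tool version.
const seenVersions = new Map(); // "toolId@version" -> content hash

function checkVersionLock(toolId, version, hash) {
  const key = `${toolId}@${version}`;
  if (!seenVersions.has(key)) {
    seenVersions.set(key, hash);
    return "RECORDED";
  }
  return seenVersions.get(key) === hash ? "UNCHANGED" : "VERSION_REUSED";
}

console.log("1.10.0" < "1.9.0"); // true: plain string comparison gets this wrong
console.log(compareVersions("1.10.0", "1.9.0")); // 1
console.log(checkVersionLock("daily-wallpaper", "1.0.0", "sha256:aaa")); // RECORDED
console.log(checkVersionLock("daily-wallpaper", "1.0.0", "sha256:bbb")); // VERSION_REUSED
```

A result of -1 from compareVersions against the stored approval is the rollback case, which a client can block or escalate according to policy.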

    // ETDI Version and Integrity Checking
    // isNewerVersion, shouldApproveOlderVersions, and Crypto are placeholders
    // for helpers supplied by the client.
    function validateToolIntegrity(toolDefinition, storedApproval) {
      // Check version consistency
      if (toolDefinition.version !== storedApproval.version) {
        if (isNewerVersion(toolDefinition.version, storedApproval.version)) {
          // New version requires re-approval
          return { status: "NEW_VERSION", requiresApproval: true };
        } else {
          // Older version - policy dependent
          return { status: "OLDER_VERSION", requiresApproval: shouldApproveOlderVersions() };
        }
      }

      // Check content integrity for same version
      const currentHash = Crypto.hash(toolDefinition.content);
      if (currentHash !== storedApproval.contentHash) {
        // Same version but different content = tampering
        return {
          status: "TAMPERING_DETECTED",
          requiresApproval: true,
          securityAlert: true
        };
      }

      // Check API contract integrity if included
      if (toolDefinition.api_contract_hash &&
          toolDefinition.api_contract_hash !== storedApproval.apiContractHash) {
        // Backend API changed without version bump
        return {
          status: "API_CONTRACT_CHANGED",
          requiresApproval: true,
          securityAlert: true
        };
      }

      return { status: "VALID", requiresApproval: false };
    }

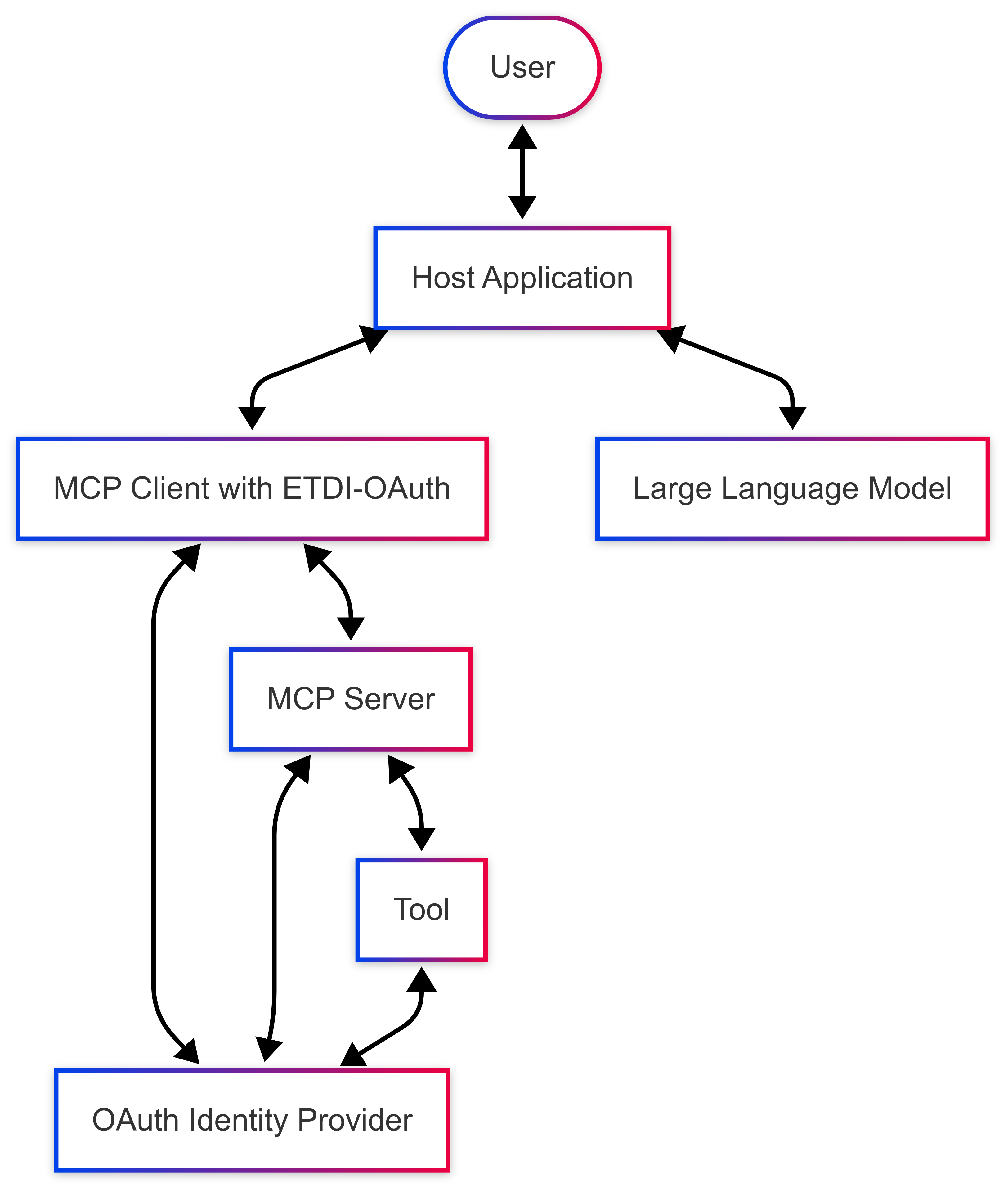

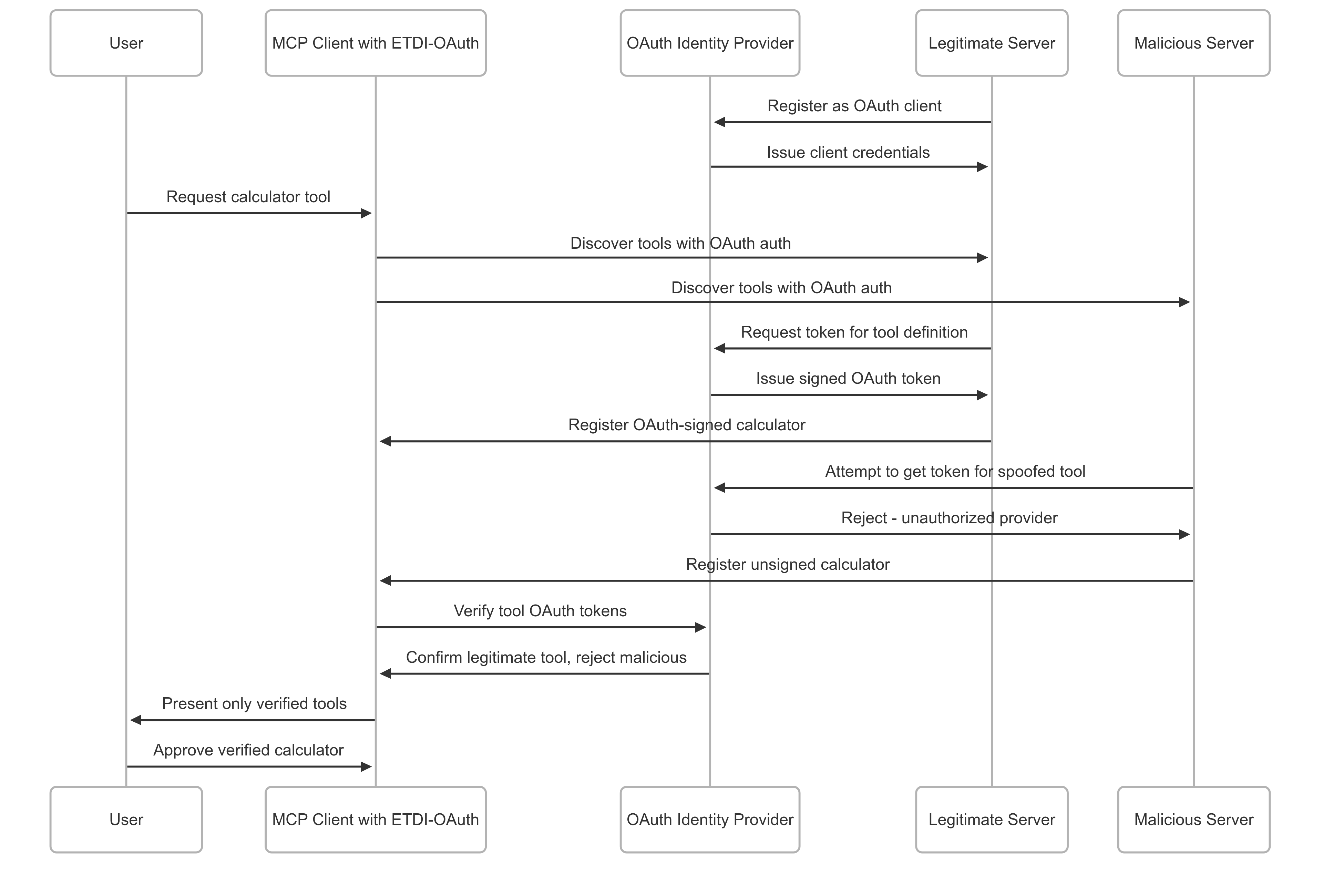

OAuth 2.0 Enhancement

ETDI integrates with OAuth 2.0 to provide standardized authorization framework with fine-grained permission management and centralized trust infrastructure.

OAuth Integration Benefits

🏛️ Standardization

Leverages established OAuth 2.0 protocols for consistent authorization patterns across the ecosystem.

🔗 Ecosystem Interoperability

Seamless integration with existing OAuth providers and identity management systems.

🎛️ Fine-grained Access Control

Granular scopes enable precise permission management beyond simple binary approvals.

🏢 Centralized Trust Management

OAuth Identity Providers serve as trusted authorities for tool provider verification.

ETDI-OAuth Architecture

The OAuth-enhanced ETDI architecture introduces several key components:

- OAuth Identity Provider (IdP): Trusted authority that issues tokens and verifies tool provider identities

- Tool Provider Registration: Providers register with IdP and receive OAuth client credentials

- Scope-based Permissions: Fine-grained OAuth scopes define specific tool capabilities

- JWT Token Integration: Signed JSON Web Tokens carry tool identity and permissions

🔄 OAuth-Enhanced ETDI Architecture

User → Host App → MCP Client ↔ OAuth IdP ↔ MCP Server → Tool
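
The role of the signed token can be sketched with Node.js's crypto module alone. The example simulates an IdP issuing an RS256 JSON Web Token and a client verifying it; the tool_id and tool_version claims and the issuer URL are hypothetical, and a production client would normally rely on a vetted JWT library and the IdP's published keys.

```javascript
const crypto = require("node:crypto");

const b64url = (input) => Buffer.from(input).toString("base64url");

// Simulated IdP key pair; a real IdP would publish its public key to clients.
const { publicKey, privateKey } = crypto.generateKeyPairSync("rsa", { modulusLength: 2048 });

function issueToken(claims) {
  const header = b64url(JSON.stringify({ alg: "RS256", typ: "JWT" }));
  const payload = b64url(JSON.stringify(claims));
  const signature = crypto.sign("sha256", Buffer.from(`${header}.${payload}`), privateKey);
  return `${header}.${payload}.${signature.toString("base64url")}`;
}

// Returns the claims if the token is valid for the expected tool, otherwise null.
function verifyToken(token, idpPublicKey, expected) {
  try {
    const [header, payload, signature] = token.split(".");
    if (!header || !payload || !signature) return null;
    const { alg } = JSON.parse(Buffer.from(header, "base64url").toString());
    if (alg !== "RS256") return null; // pin the algorithm instead of trusting the header
    const signedPart = Buffer.from(`${header}.${payload}`);
    if (!crypto.verify("sha256", signedPart, idpPublicKey, Buffer.from(signature, "base64url"))) {
      return null;
    }
    const claims = JSON.parse(Buffer.from(payload, "base64url").toString());
    if (claims.iss !== expected.issuer) return null;
    if (claims.exp * 1000 <= Date.now()) return null; // expired
    // Bind the token to one specific tool definition (hypothetical custom claims).
    if (claims.tool_id !== expected.toolId || claims.tool_version !== expected.version) return null;
    return claims;
  } catch {
    return null; // malformed token
  }
}

const expected = { issuer: "https://idp.example.com", toolId: "secure-docs-scanner", version: "1.2.0" };
const token = issueToken({
  iss: expected.issuer,
  exp: Math.floor(Date.now() / 1000) + 300,
  scope: "file:read network:https",
  tool_id: expected.toolId,
  tool_version: expected.version,
});

console.log(verifyToken(token, publicKey, expected) !== null); // true
console.log(verifyToken(token, publicKey, { ...expected, version: "1.3.0" })); // null
```

Binding the token to a tool id and version means a token issued for one definition cannot be reused to vouch for another.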

Dual Authorization Model

ETDI-OAuth implements a sophisticated dual authorization model:

1. Tool-to-System Authorization

- ETDI proves the tool's identity and permissions to the MCP client and user

- OAuth scopes define which system resources the tool can access

- Cryptographic signatures ensure tool authenticity

2. User-to-Tool Authorization

- Tools verify user/application authorization for their services

- JWT tokens indicate user entitlements (e.g., subscription level)

- Dynamic permission validation based on user context
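
Scope checks on the tool-to-system side reduce to set comparisons. The sketch below assumes the token carries the standard space-delimited OAuth 2.0 scope string and that a tool's declared permissions use the same names as scopes; the function names are hypothetical.

```javascript
// Parse a space-delimited OAuth 2.0 scope string into a set.
const parseScopes = (scopeString) => new Set(scopeString.split(" ").filter(Boolean));

// Permissions the tool declares that the token does not grant.
function missingScopes(grantedScopeString, declaredPermissions) {
  const granted = parseScopes(grantedScopeString);
  return declaredPermissions.filter((permission) => !granted.has(permission));
}

// Permissions requested now that were not part of the stored approval.
function newlyRequestedScopes(approvedPermissions, requestedPermissions) {
  const approved = new Set(approvedPermissions);
  return requestedPermissions.filter((permission) => !approved.has(permission));
}

console.log(missingScopes("file:read network:https", ["file:read", "network:https"])); // []
console.log(newlyRequestedScopes(["file:read"], ["file:read", "file:write"])); // [ 'file:write' ]
```

A non-empty result from either function is a reason to block the invocation or to ask the user for fresh approval.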

Policy-Based Access Control

ETDI extends beyond static OAuth scopes to implement dynamic, context-aware access control using dedicated policy engines for fine-grained authorization decisions.

Policy Engine Architecture

The policy-based extension integrates specialized Policy Decision Points (PDPs) that evaluate tool actions against dynamic policies considering runtime context:

🧠 Policy Decision Point (PDP)

Centralized engine (OPA, Amazon Verified Permissions) that evaluates authorization requests against defined policies.

📋 Policy Administration Point (PAP)

Management system for creating, updating, and distributing signed policy artifacts.

🔍 Context-Aware Evaluation

Dynamic assessment based on time, location, user attributes, data sensitivity, and previous actions.

📊 Real-time Risk Assessment

Continuous evaluation of risk factors and adaptive authorization decisions.

Policy Evaluation Process

When an MCP Client intends to invoke a tool, the following evaluation occurs:

    // Policy-Based Tool Invocation with Amazon Verified Permissions
    // AmazonVerifiedPermissions here is a simplified wrapper, not the AWS SDK client;
    // MCPClient, HostApp, MCPServer, and the helper functions are likewise placeholders.
    function invokeToolWithPolicyCheck(toolId, resourceId, userContext) {
      // 1. Retrieve and verify tool definition via ETDI
      const toolDefinition = MCPClient.getVerifiedToolDefinition(toolId);
      if (!toolDefinition) {
        return AccessDenied("Tool identity/integrity verification failed");
      }

      // 2. Prepare policy evaluation request attributes
      const principal = toolDefinition.identity; // e.g., "ToolVendor::ToolX_v1.2"
      const action = determineToolAction(toolDefinition, resourceId);
      const resource = resourceId; // e.g., "UserDocs::Private::Report.pdf"

      // 3. Enrich context with comprehensive attributes
      const context = {
        user: {
          id: userContext.userId,
          department: userContext.department,
          clearanceLevel: userContext.securityClearance
        },
        request: {
          time: currentTime(),
          purpose: userContext.statedPurpose,
          location: userContext.location,
          deviceTrust: userContext.deviceSecurityPosture
        },
        data: {
          classification: getDataClassification(resourceId),
          sensitivity: getDataSensitivity(resourceId)
        },
        session: {
          previousActions: getSessionHistory(),
          riskScore: calculateRiskScore(userContext)
        }
      };

      // 4. Call Policy Engine for authorization decision
      const policyStoreId = getUserPolicyStore(userContext.userId);
      const authDecision = AmazonVerifiedPermissions.isAuthorized(
        policyStoreId,
        principal,
        action,
        resource,
        context
      );

      // 5. Process authorization result
      if (authDecision.isAllowed()) {
        log("Policy check passed for " + toolId + " on " + resourceId);
        // Proceed with tool invocation
        const userAuthToken = HostApp.getUserTokenForTool(toolId);
        const toolResult = MCPServer.invokeTool(
          toolDefinition,
          resourceId,
          userAuthToken
        );
        return toolResult;
      } else {
        log("Policy check failed: " + authDecision.errors());
        return AccessDenied("Tool not authorized by policy for this context");
      }
    }

Policy Request Attributes

The policy engine evaluates requests based on comprehensive attributes:

- Principal: Authenticated identity of the tool (derived from ETDI-OAuth token)

- Action: Specific operation the tool intends to perform

- Resource: Target resource of the action with classification metadata

- Context: Rich contextual information including user attributes, device security posture, time, location, data sensitivity, and session history
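
The four attributes map onto a single authorization request. The sketch below builds such a request as a plain object shaped after Amazon Verified Permissions' IsAuthorized operation, without calling the service; the field names reflect that API as understood here and should be checked against the current reference, and the entity types, ids, and policy store id are hypothetical.

```javascript
// Convert a plain JavaScript value into the tagged attribute form used in the context map.
function toAttributeValue(value) {
  if (typeof value === "boolean") return { boolean: value };
  if (typeof value === "string") return { string: value };
  if (Number.isInteger(value)) return { long: value };
  if (Array.isArray(value)) return { set: value.map(toAttributeValue) };
  if (value !== null && typeof value === "object") {
    const record = Object.fromEntries(
      Object.entries(value).map(([key, nested]) => [key, toAttributeValue(nested)])
    );
    return { record };
  }
  throw new TypeError(`Unsupported context value: ${String(value)}`);
}

// Assemble principal, action, resource, and context into one request object.
function buildIsAuthorizedInput({ policyStoreId, principal, action, resource, context }) {
  return {
    policyStoreId,
    principal, // { entityType, entityId }
    action,    // { actionType, actionId }
    resource,  // { entityType, entityId }
    context: { contextMap: toAttributeValue(context).record },
  };
}

const input = buildIsAuthorizedInput({
  policyStoreId: "example-policy-store-id",
  principal: { entityType: "Tool", entityId: "ToolX_v1.2" },
  action: { actionType: "Action", actionId: "ReadFile" },
  resource: { entityType: "Document", entityId: "Report.pdf" },
  context: {
    user: { department: "Finance", clearanceLevel: 3 },
    device: { isManaged: true },
  },
});

console.log(JSON.stringify(input, null, 2));
```

Keeping the request builder separate from the service call makes the attribute mapping easy to unit test and to log for audit purposes.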

Cedar Policy Language Integration

ETDI leverages Amazon Verified Permissions with Cedar policy language for expressive, scalable policy definition and enforcement.

Cedar Policy Features

- Expressive Syntax: Human-readable policy language supporting complex authorization logic

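- Verification-Guided: Cedar's design is backed by formal verification, and its policy validator and analysis tooling help check policies for correctness

- Hierarchical Entities: Principals and resources can be organized into groups and hierarchies that policies match with the in operator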

- Attribute-Based: Rich attribute-based access control (ABAC) capabilities

    // Example Cedar Policies for ETDI Tool Authorization

    // Policy 1: Restrict financial data access to certified tools during business hours
    permit(
      principal in Group::"CertifiedFinancialTools",
      action == Action::"ReadFile",
      resource in Folder::"FinancialData"
    ) when {
      context.time.hour >= 9 && context.time.hour <= 17 &&
      context.user.department == "Finance" &&
      principal.certifications.contains("SOX-Compliant")
    };

    // Policy 2: Prevent PII access for tools without privacy certification
    forbid(
      principal,
      action == Action::"ProcessDocument",
      resource
    ) when {
      resource.classification == "PII" &&
      !principal.certifications.contains("Privacy-Shield")
    };

    // Policy 3: Require elevated approval for high-risk operations
    // Cedar's comparison operators apply to numeric values, so the approval level
    // is matched by equality; a numeric rank attribute would express "Manager or above".
    permit(
      principal,
      action == Action::"ModifySystem",
      resource
    ) when {
      context.approval.level == "Manager" &&
      context.request.justification != "" &&
      principal.riskScore <= 3
    };

    // Policy 4: Time-based restrictions for external network access
    permit(
      principal,
      action == Action::"NetworkRequest",
      resource
    ) when {
      context.time.timezone == "UTC" &&
      context.time.hour >= 6 && context.time.hour <= 22 &&
      !(resource.destination like "*suspicious-domains*")
    };

    // Policy 5: Data export restrictions based on user clearance
    permit(
      principal in Group::"ExportCapableTools",
      action == Action::"ExportData",
      resource
    ) when {
      context.user.clearanceLevel >= resource.requiredClearance &&
      context.device.isManaged == true &&
      context.location.isApproved == true
    };
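
How permit and forbid policies combine can be sketched in a few lines. The evaluator below imitates Cedar's decision rule (deny by default, and any matching forbid overrides every permit) using plain JavaScript predicates; it is a teaching aid, not a Cedar implementation, and the two sample policies are only loosely modeled on Policies 1 and 2.

```javascript
// Each policy has an effect ("permit" or "forbid") and a predicate over the request.
const policies = [
  {
    id: "permit-certified-financial-read",
    effect: "permit",
    when: (request) =>
      request.action === "ReadFile" &&
      request.principal.certifications.includes("SOX-Compliant"),
  },
  {
    id: "forbid-pii-without-privacy-cert",
    effect: "forbid",
    when: (request) =>
      request.resource.classification === "PII" &&
      !request.principal.certifications.includes("Privacy-Shield"),
  },
];

// Deny by default; a matching forbid always wins over matching permits.
function decide(policySet, request) {
  const matching = policySet.filter((policy) => policy.when(request));
  if (matching.some((policy) => policy.effect === "forbid")) return "DENY";
  if (matching.some((policy) => policy.effect === "permit")) return "ALLOW";
  return "DENY";
}

const tool = { certifications: ["SOX-Compliant"] };
console.log(decide(policies, { principal: tool, action: "ReadFile", resource: { classification: "Financial" } })); // ALLOW
console.log(decide(policies, { principal: tool, action: "ReadFile", resource: { classification: "PII" } })); // DENY
console.log(decide(policies, { principal: tool, action: "ExportData", resource: { classification: "Public" } })); // DENY
```

The third request is denied simply because no permit matches, which is why every capability a tool needs must be granted explicitly.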

Policy Lifecycle Management

Cedar policies in ETDI follow rigorous lifecycle management:

- Policy Authoring: Security administrators create policies using Cedar syntax

- Formal Verification: Policies undergo verification for correctness and consistency

- Digital Signing: Policies are cryptographically signed before distribution

- Secure Distribution: Signed policies are distributed to Policy Decision Points

- Runtime Evaluation: PDP evaluates requests against current policy set

- Audit and Monitoring: All policy decisions are logged for compliance and analysis

Call Stack Verification for Tool Chain Security

ETDI implements Call Stack Verification to enforce policies on tool invocation sequences, preventing unauthorized tool chaining and privilege escalation attacks.

Call Stack Security Objectives

The Call Stack Verification system addresses several critical security concerns:

🔗 Unauthorized Tool Chaining

Enforce rules specifying acceptable tool call sequences (e.g., Tool A can call Tool B, but Tool C cannot call Tool D directly).

⬆️ Privilege Escalation Prevention

Prevent scenarios where lower-privilege tools call higher-privilege tools to improperly elevate effective permissions.

🔄 Circular Call Dependencies

Detect and prevent call sequences where tools call themselves directly or indirectly, preventing resource exhaustion.

📊 Excessive Call Depth Protection

Limit maximum number of nested tool calls to prevent denial-of-service attacks and stack overflow situations.

Call Stack Policy Framework

The ETDI Call Stack Verifier maintains session state and evaluates tool invocations against CallStackPolicy:

    // ETDI Call Stack Verification System
    // getOrCreateSession, checkRateLimit, and getPrivilegeLevel are assumed to be
    // implemented elsewhere in the class.
    class ETDICallStackVerifier {
      constructor() {
        this.activeSessions = new Map();
        this.callStackPolicies = new Map();
        this.maxCallDepth = 10; // Fallback when the policy sets no maxCallDepth
        this.rateLimits = new Map();
      }

      verifyToolInvocation(sessionId, callerTool, calleeTool, context) {
        const session = this.getOrCreateSession(sessionId);
        const policy = this.callStackPolicies.get(session.policyId);

        // Fail closed when the session has no registered policy
        if (!policy) {
          return { allowed: false, reason: "NO_POLICY_CONFIGURED" };
        }

        // 1. Check maximum call depth (the policy value takes precedence)
        const maxDepth = policy.maxCallDepth ?? this.maxCallDepth;
        if (session.callStack.length >= maxDepth) {
          return {
            allowed: false,
            reason: "EXCESSIVE_CALL_DEPTH",
            maxDepth: maxDepth
          };
        }

        // 2. Check for circular dependencies
        if (session.callStack.some(call => call.toolId === calleeTool.id)) {
          if (!policy.allowCircularCalls) {
            return {
              allowed: false,
              reason: "CIRCULAR_DEPENDENCY_DETECTED",
              callStack: session.callStack.map(c => c.toolId)
            };
          }
        }

        // 3. Verify caller-callee relationship permissions
        if (!this.isChainAllowed(callerTool, calleeTool, policy)) {
          return {
            allowed: false,
            reason: "UNAUTHORIZED_TOOL_CHAINING",
            caller: callerTool.id,
            callee: calleeTool.id
          };
        }

        // 4. Check privilege escalation
        if (this.detectPrivilegeEscalation(callerTool, calleeTool)) {
          return {
            allowed: false,
            reason: "PRIVILEGE_ESCALATION_ATTEMPT",
            callerPrivileges: callerTool.privileges,
            calleePrivileges: calleeTool.privileges
          };
        }

        // 5. Verify rate limits
        if (!this.checkRateLimit(callerTool.id, calleeTool.id)) {
          return {
            allowed: false,
            reason: "RATE_LIMIT_EXCEEDED"
          };
        }

        // 6. Add to call stack and allow invocation.
        // The entry should be popped again once the callee returns.
        session.callStack.push({
          toolId: calleeTool.id,
          caller: callerTool.id,
          timestamp: Date.now(),
          context: context
        });
        return { allowed: true };
      }

      isChainAllowed(callerTool, calleeTool, policy) {
        // Check explicit allow/deny lists; "*" in a pattern matches any tool id
        const matches = (pattern) => {
          const [caller, callee] = pattern.split("->");
          return (caller === "*" || caller === callerTool.id) &&
                 (callee === "*" || callee === calleeTool.id);
        };
        if (policy.deniedChains.some(matches)) {
          return false;
        }
        if (policy.allowedChains.some(matches)) {
          return true;
        }
        // No explicit rule matched: fall back to the policy default
        return policy.defaultChainPolicy === "allow";
      }

      detectPrivilegeEscalation(callerTool, calleeTool) {
        // Compare privilege levels
        const callerLevel = this.getPrivilegeLevel(callerTool.privileges);
        const calleeLevel = this.getPrivilegeLevel(calleeTool.privileges);
        // Prevent lower privilege tool calling higher privilege tool
        return callerLevel < calleeLevel;
      }
    }

Call Stack Policy Definition

CallStackPolicy defines comprehensive rules for tool invocation sequences:

Example call stack policy configuration:

    {
      "policyId": "enterprise-tool-chain-policy",
      "version": "1.0",
      "defaultChainPolicy": "deny",
      "maxCallDepth": 8,
      "allowCircularCalls": false,
      "allowedChains": [
        "data-fetcher->data-analyzer",
        "data-analyzer->report-generator",
        "auth-tool->secure-file-reader",
        "workflow-orchestrator->*"
      ],
      "deniedChains": [
        "external-api-tool->internal-system-tool",
        "guest-tool->admin-tool",
        "*->credential-manager"
      ],
      "privilegeEscalationPolicy": {
        "enabled": true,
        "allowEscalationWithApproval": true,
        "approvalRequired": ["manager", "security-team"]
      },
      "rateLimits": {
        "default": {
          "callsPerMinute": 10,
          "callsPerHour": 100
        },
        "external-api-tool": {
          "callsPerMinute": 3,
          "callsPerHour": 20
        }
      },
      "monitoring": {
        "logAllCalls": true,
        "alertOnViolations": true,
        "alertThresholds": {
          "rateLimitViolations": 5,
          "privilegeEscalationAttempts": 1
        }
      }
    }
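
The rateLimits section can be enforced with a small sliding-window counter. This is one possible sketch of what a checkRateLimit helper might do, assuming limits are tracked per tool id and time is passed in explicitly for testability; the class name and method are hypothetical.

```javascript
// Sliding-window rate limiter driven by the "rateLimits" section of a call stack policy.
class SlidingWindowRateLimiter {
  constructor(limits) {
    this.limits = limits;   // e.g. { default: {...}, "external-api-tool": {...} }
    this.calls = new Map(); // toolId -> timestamps (ms) of accepted calls
  }

  // Returns true and records the call if both the per-minute and per-hour limits allow it.
  tryAcquire(toolId, now = Date.now()) {
    const limit = this.limits[toolId] ?? this.limits.default;
    // Keep only calls from the last hour, then count those from the last minute.
    const lastHour = (this.calls.get(toolId) ?? []).filter((t) => now - t < 3600000);
    const lastMinuteCount = lastHour.filter((t) => now - t < 60000).length;
    const allowed =
      lastMinuteCount < limit.callsPerMinute && lastHour.length < limit.callsPerHour;
    if (allowed) lastHour.push(now);
    this.calls.set(toolId, lastHour);
    return allowed;
  }
}

const limiter = new SlidingWindowRateLimiter({
  default: { callsPerMinute: 10, callsPerHour: 100 },
  "external-api-tool": { callsPerMinute: 3, callsPerHour: 20 },
});

const start = 1000000;
const results = [0, 1, 2, 3].map((offset) => limiter.tryAcquire("external-api-tool", start + offset));
console.log(results); // [ true, true, true, false ]
console.log(limiter.tryAcquire("external-api-tool", start + 60001)); // true
```

Rejected calls are not recorded, so a tool that keeps retrying while blocked does not extend its own lockout.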

Runtime Monitoring and Enforcement

The Call Stack Verification system provides comprehensive runtime monitoring:

- Real-time Validation: Every tool invocation is validated against current call stack state

- Violation Logging: All policy violations are logged with full context

- Automated Response: Configurable responses to violations (block, warn, alert)

- Session Tracking: Complete call chain tracking per user session

- Anomaly Detection: Machine learning-based detection of unusual call patterns

Comprehensive Security Analysis

The ETDI security model, enhanced with OAuth 2.0 and policy-based access control, provides multi-layered defense against identified threats through synergistic application of core security principles.

Defense Against Tool Impersonation (Tool Poisoning)

ETDI's cryptographic authentication mechanisms effectively counter tool poisoning attacks:

🔐 Cryptographic Authenticity

OAuth tokens from trusted IdPs ensure tool identity is backed by verifiable cryptographic attestation, preventing simple impersonation.

✅ Provider Verification

Clients trust tokens only from specific IdPs and verify the issuer claim, establishing provider authenticity.

🔗 Token-Definition Binding

Custom claims link tokens to specific tool definitions, preventing token replay for malicious tools.

📋 Policy Enforcement

Unverified tools are flagged and subjected to strict policies including warnings or complete blocking.

🛡️ ETDI Tool Poisoning Defense

Malicious Tool → Signature Verification Fails → Access Denied

Legitimate Tool → Signature Verified → Policy Check → Access Granted

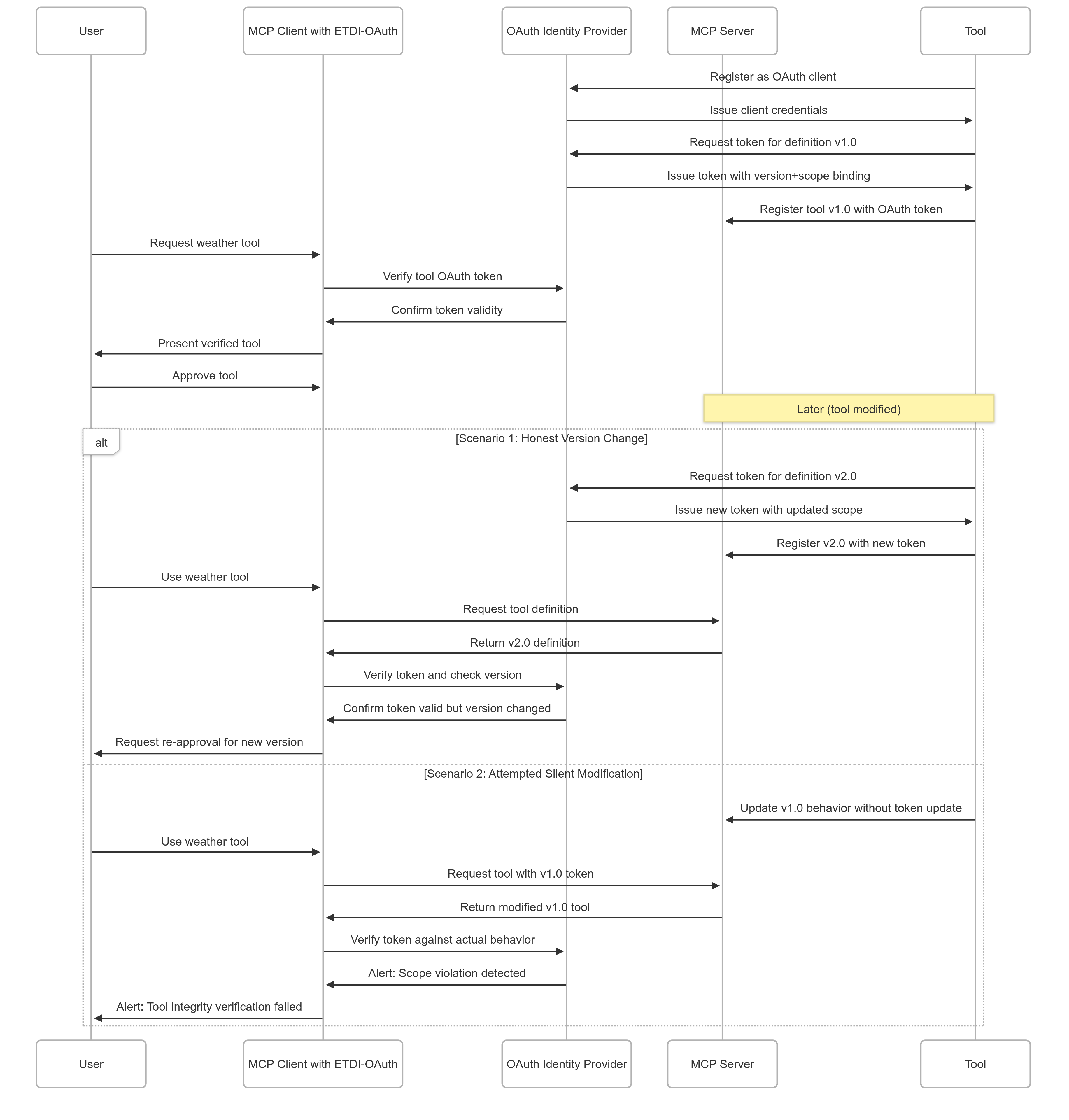

Defense Against Post-Approval Modification (Rug Pulls)

ETDI's immutability and versioning principles provide robust protection against rug pull attacks:

- Immutable Versioning with Re-Approval: Changes to security-relevant aspects necessitate new version numbers, triggering mandatory user re-approval

- OAuth Token as Version-Specific Contract: Tokens are tied to specific tool versions and scopes, making unauthorized modifications detectable

- Scope Adherence Enforcement: Client/host enforces that tools operate only within approved OAuth scopes

- Integrity of Stored Approval: Client maintains approved versions and permissions, detecting any deviations

🚫 ETDI Rug Pull Prevention

Tool Modification → Version Change Detection → Re-approval Required → User Consent

Enhanced Trust and Auditability

The integrated ETDI framework provides comprehensive trust and auditability features:

🏛️ Centralized Trust Management

OAuth IdPs serve as centralized authorities for tool provider identity and authorization policy management.

📊 Standardized Permissions

OAuth scopes provide standardized, well-understood permission definitions across the ecosystem.

🔄 Revocation Capabilities

IdPs support immediate token and credential revocation for swift response to security compromises.

📝 Comprehensive Audit Trail

Complete logging of all authorization decisions, policy evaluations, and tool interactions for compliance and forensics.

Policy-Based Context-Aware Security

The policy engine layer adds dynamic authorization capabilities that address complex real-world scenarios:

- Context-Sensitive Decisions: Authorization considers time, location, user attributes, data sensitivity, and session history

- Risk-Based Access Control: Dynamic risk assessment influences authorization decisions

- Adaptive Security Posture: Security controls adapt to the changing threat landscape and to user behavior patterns

- Principle of Least Privilege: Fine-grained policies ensure tools receive minimum necessary permissions
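A context-sensitive, risk-based decision of the kind listed above might look like the following sketch. The risk weights, the threshold of 60, and the request fields are invented for illustration; a real deployment would express these rules in the policy engine rather than in client code.

```javascript
// Hypothetical risk weights; real values would come from policy configuration.
function assessRisk(context) {
  let risk = 0;
  if (!context.deviceManaged) risk += 40;                 // unmanaged device
  if (context.hour < 6 || context.hour > 22) risk += 30;  // outside working hours
  if (context.dataSensitivity === "high") risk += 30;     // sensitive data
  return risk;
}

function authorize(request) {
  // Least privilege: the required scope must have been granted explicitly
  if (!request.grantedScopes.includes(request.requiredScope)) {
    return { allowed: false, reason: "scope not granted" };
  }
  const risk = assessRisk(request.context);
  if (risk >= 60) {
    return { allowed: false, reason: `risk ${risk} too high` };
  }
  return { allowed: true, reason: `risk ${risk} acceptable` };
}

const routine = {
  grantedScopes: ["files:read"],
  requiredScope: "files:read",
  context: { deviceManaged: true, hour: 10, dataSensitivity: "low" }
};
const risky = {
  ...routine,
  context: { deviceManaged: false, hour: 23, dataSensitivity: "high" }
};

console.log(authorize(routine)); // { allowed: true, reason: 'risk 0 acceptable' }
console.log(authorize(risky));   // { allowed: false, reason: 'risk 100 too high' }
```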

Implementation Guide

This section provides comprehensive guidance for implementing ETDI in production MCP environments with OAuth enhancement and policy-based access control.

Implementation Phases

- Infrastructure Setup: Establish cryptographic key management and OAuth infrastructure

- Tool Definition Migration: Convert existing tools to ETDI-compliant signed definitions

- Client Integration: Update MCP clients to support ETDI verification and OAuth flows

- Policy Engine Deployment: Deploy and configure policy decision points

- Monitoring and Compliance: Implement comprehensive logging and monitoring systems

Key Management Setup

// ETDI Key Management Implementation
// Uses the Web Crypto API (crypto.subtle), which is global in browsers and recent Node.js releases;
// on Node.js versions without a global crypto, use require("node:crypto").webcrypto.
// SecureKeyStore, CertificateValidator, and RevocationChecker are application-provided components.

// Helpers for moving binary data in and out of base64 strings
const toBase64 = (buffer) => btoa(String.fromCharCode(...new Uint8Array(buffer)));
const fromBase64 = (text) => Uint8Array.from(atob(text), (c) => c.charCodeAt(0));

class ETDIKeyManager {
  constructor(config) {
    this.keyStore = new SecureKeyStore(config.keyStorePath);
    this.certValidator = new CertificateValidator(config.trustedRoots);
    this.revocationChecker = new RevocationChecker(config.crlEndpoints);
  }

  async generateProviderKeys(providerId) {
    // Generate an RSA-PSS key pair with a 2048-bit modulus
    const keyPair = await crypto.subtle.generateKey(
      {
        name: "RSA-PSS",
        modulusLength: 2048,
        publicExponent: new Uint8Array([1, 0, 1]),
        hash: "SHA-256"
      },
      true,
      ["sign", "verify"]
    );

    // Store private key securely
    await this.keyStore.storePrivateKey(providerId, keyPair.privateKey);

    // Export public key for distribution
    const publicKey = await crypto.subtle.exportKey("spki", keyPair.publicKey);
    return {
      providerId,
      publicKey: toBase64(publicKey),
      algorithm: "RSA-PSS-SHA256"
    };
  }

  async signToolDefinition(toolDefinition, providerId) {
    const privateKey = await this.keyStore.getPrivateKey(providerId);

    // Add the timestamp before signing so that it is covered by the signature
    // and the verifier reconstructs exactly the same payload
    const payload = { ...toolDefinition, timestamp: new Date().toISOString() };

    // JSON.stringify depends on key order; signer and verifier must serialize identically
    const dataToSign = JSON.stringify(payload);
    const signature = await crypto.subtle.sign(
      { name: "RSA-PSS", saltLength: 32 },
      privateKey,
      new TextEncoder().encode(dataToSign)
    );
    return {
      ...payload,
      signature: toBase64(signature),
      signingAlgorithm: "RSA-PSS-SHA256"
    };
  }

  async verifyToolDefinition(signedDefinition, providerPublicKey) {
    try {
      // Import public key
      const publicKey = await crypto.subtle.importKey(
        "spki",
        fromBase64(providerPublicKey),
        { name: "RSA-PSS", hash: "SHA-256" },
        false,
        ["verify"]
      );

      // Separate the signature metadata from the signed payload
      const { signature, signingAlgorithm, ...originalDefinition } = signedDefinition;
      const dataToVerify = JSON.stringify(originalDefinition);

      // Verify signature
      const isValid = await crypto.subtle.verify(
        { name: "RSA-PSS", saltLength: 32 },
        publicKey,
        fromBase64(signature),
        new TextEncoder().encode(dataToVerify)
      );
      return { valid: isValid, definition: originalDefinition };
    } catch (error) {
      // Malformed keys or signatures are treated as verification failures
      return { valid: false, error: error.message };
    }
  }
}
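The signing and verification methods above serialize definitions with `JSON.stringify`, whose output depends on property order. If a definition is rebuilt with its keys in a different order between signing and verification, an otherwise valid signature will fail. One way to avoid this is to sign a canonical form. The `canonicalize` helper below is a simplified, hypothetical sketch (sorted keys, no whitespace), not a complete implementation of a standard such as RFC 8785, and it assumes plain JSON data with no `undefined`, functions, or `Date` objects.

```javascript
// Serialize JSON data with object keys in sorted order so that
// logically equal definitions always produce identical bytes.
function canonicalize(value) {
  if (Array.isArray(value)) {
    return `[${value.map(canonicalize).join(",")}]`;
  }
  if (value !== null && typeof value === "object") {
    const members = Object.keys(value)
      .sort()
      .map((key) => `${JSON.stringify(key)}:${canonicalize(value[key])}`);
    return `{${members.join(",")}}`;
  }
  return JSON.stringify(value); // strings, numbers, booleans, null
}

const a = { name: "calc", permissions: ["read"], version: "1.0.0" };
const b = { version: "1.0.0", permissions: ["read"], name: "calc" };

console.log(JSON.stringify(a) === JSON.stringify(b)); // false
console.log(canonicalize(a) === canonicalize(b));     // true
```

Passing `canonicalize(definition)` to the signer and the verifier in place of `JSON.stringify(definition)` removes the dependence on key order.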

OAuth Integration Implementation

// OAuth-Enhanced ETDI Client Implementation
// JWT verification uses the `jose` package (an assumption; any library that resolves
// signing keys from a JWKS works). PolicyEngine, getTrustedPublicKey, getUserAuthToken,
// and checkTokenRevocation are application-provided.
const { createRemoteJWKSet, jwtVerify } = require("jose");

class OAuthETDIClient {
  constructor(config) {
    this.oauthConfig = config.oauth;
    this.keyManager = new ETDIKeyManager(config.keys);
    this.policyEngine = new PolicyEngine(config.policies);
    this.tokenCache = new Map();
    this.jwksCache = new Map();
  }

  async authenticateAndAuthorize(toolDefinition, userContext) {
    // 1. Verify tool signature and identity
    const verificationResult = await this.keyManager.verifyToolDefinition(
      toolDefinition,
      await this.getTrustedPublicKey(toolDefinition.provider)
    );
    if (!verificationResult.valid) {
      throw new Error("Tool signature verification failed");
    }

    // 2. Validate OAuth token if present
    if (toolDefinition.oauth_token) {
      const tokenValidation = await this.validateOAuthToken(
        toolDefinition.oauth_token,
        toolDefinition.oauth_config
      );
      if (!tokenValidation.valid) {
        throw new Error("OAuth token validation failed");
      }
    }

    // 3. Check policy-based authorization
    const policyDecision = await this.policyEngine.evaluate({
      principal: toolDefinition.tool_id,
      action: userContext.requestedAction,
      resource: userContext.targetResource,
      context: {
        user: userContext.user,
        environment: userContext.environment,
        time: new Date().toISOString()
      }
    });
    if (!policyDecision.allowed) {
      throw new Error(`Policy violation: ${policyDecision.reason}`);
    }

    // 4. Obtain user authorization token if required
    let userToken = null;
    if (toolDefinition.requires_user_auth) {
      userToken = await this.getUserAuthToken(
        toolDefinition.oauth_config,
        userContext.user
      );
    }

    return {
      authorized: true,
      toolDefinition: verificationResult.definition,
      userToken: userToken,
      policyDecision: policyDecision
    };
  }

  getJWKS(jwksUri) {
    // Reuse one remote key set per URI so that keys are fetched and cached once
    if (!this.jwksCache.has(jwksUri)) {
      this.jwksCache.set(jwksUri, createRemoteJWKSet(new URL(jwksUri)));
    }
    return this.jwksCache.get(jwksUri);
  }

  async validateOAuthToken(token, oauthConfig) {
    try {
      // Get JWKS from OAuth provider
      const jwks = this.getJWKS(oauthConfig.jwks_uri);

      // Verify the JWT signature, issuer, audience, and expiry
      const { payload: decoded } = await jwtVerify(token, jwks, {
        issuer: oauthConfig.issuer,
        audience: oauthConfig.audience
      });

      // Verify token hasn't been revoked
      const revoked = await this.checkTokenRevocation(token);
      return {
        valid: !revoked,
        claims: decoded,
        scopes: decoded.scope ? decoded.scope.split(' ') : []
      };
    } catch (error) {
      // Any verification failure invalidates the token
      return { valid: false, error: error.message };
    }
  }
}
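`validateOAuthToken` returns the scopes carried by the token, but the flow above does not compare them with what a tool call actually needs. A minimal sketch of that scope adherence check follows; the function names and the scope strings (`files:read`, `files:write`) are hypothetical.

```javascript
// Return the scopes a tool call needs that the validated token does not grant.
function missingScopes(requiredScopes, grantedScopes) {
  const granted = new Set(grantedScopes);
  return requiredScopes.filter((scope) => !granted.has(scope));
}

// Throw if the token validation result does not cover every required scope.
// `tokenValidation` has the shape returned by validateOAuthToken: { valid, claims, scopes }.
function assertScopes(requiredScopes, tokenValidation) {
  const missing = missingScopes(requiredScopes, tokenValidation.scopes);
  if (missing.length > 0) {
    throw new Error(`Missing OAuth scopes: ${missing.join(", ")}`);
  }
}

const tokenValidation = { valid: true, claims: {}, scopes: ["files:read"] };

assertScopes(["files:read"], tokenValidation); // passes silently

try {
  assertScopes(["files:read", "files:write"], tokenValidation);
} catch (error) {
  console.log(error.message); // Missing OAuth scopes: files:write
}
```

A client could call `assertScopes` directly after token validation and before the policy check, so that a request outside the approved scopes never reaches the policy engine.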

Policy Engine Integration

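// Amazon Verified Permissions Integration
// Uses the AWS SDK for JavaScript v3 package @aws-sdk/client-verifiedpermissions
const {
  VerifiedPermissionsClient,
  IsAuthorizedCommand,
  CreatePolicyCommand
} = require("@aws-sdk/client-verifiedpermissions");

// Convert a plain JavaScript value into the typed attribute shape the service expects
function toAttributeValue(value) {
  if (typeof value === "boolean") return { boolean: value };
  if (Number.isInteger(value)) return { long: value };
  if (Array.isArray(value)) return { set: value.map(toAttributeValue) };
  if (value !== null && typeof value === "object") {
    return {
      record: Object.fromEntries(
        Object.entries(value).map(([key, inner]) => [key, toAttributeValue(inner)])
      )
    };
  }
  return { string: String(value) };
}

class AVPPolicyEngine {
  constructor(config) {
    this.avpClient = new VerifiedPermissionsClient({
      region: config.region,
      credentials: config.credentials
    });
    this.policyStoreId = config.policyStoreId;
  }

  async evaluate(authorizationRequest) {
    try {
      const response = await this.avpClient.send(new IsAuthorizedCommand({
        policyStoreId: this.policyStoreId,
        principal: {
          entityType: "Tool",
          entityId: authorizationRequest.principal
        },
        action: {
          actionType: authorizationRequest.action.type,
          actionId: authorizationRequest.action.id
        },
        resource: {
          entityType: "Resource",
          entityId: authorizationRequest.resource
        },
        context: {
          contextMap: toAttributeValue(authorizationRequest.context).record
        }
      }));

      const allowed = response.decision === "ALLOW";
      return {
        allowed,
        // The response carries no free-text reason, so derive one from the decision
        reason: allowed ? "Permitted by policy" : "Denied by policy or no matching permit",
        determiningPolicies: response.determiningPolicies,
        errors: response.errors || []
      };
    } catch (error) {
      // Fail closed: an evaluation error never grants access
      return {
        allowed: false,
        reason: "Policy evaluation error",
        error: error.message
      };
    }
  }

  async createPolicy(policyDefinition) {
    // Create Cedar policy in Amazon Verified Permissions
    return await this.avpClient.send(new CreatePolicyCommand({
      policyStoreId: this.policyStoreId,
      definition: {
        static: {
          statement: policyDefinition.cedarStatement
        }
      }
    }));
  }
}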

Deployment Considerations

🔄 Backward Compatibility

Implement a gradual migration strategy that allows ETDI and standard MCP tools to coexist during the transition period.

⚡ Performance Optimization

Implement caching strategies for public keys, policy decisions, and token validation to minimize latency impact.

📊 Monitoring and Alerting

Deploy comprehensive monitoring for signature failures, policy violations, and suspicious activity patterns.

🔐 Security Hardening

Store keys in Hardware Security Modules (HSMs) or a cloud key management service in production deployments.
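The caching recommendation under Performance Optimization can be illustrated with a small time-to-live cache. The `TTLCache` class, its injectable clock, and the 1000 ms lifetime are assumptions chosen for the example; they are not part of ETDI.

```javascript
// Minimal time-to-live cache for public keys, policy decisions, or token validations.
class TTLCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, useful for testing
    this.entries = new Map();
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: force a fresh lookup
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Usage with a fake clock to show the expiry behavior
let fakeNow = 0;
const keyCache = new TTLCache(1000, () => fakeNow);
keyCache.set("provider-a", "base64-public-key");

console.log(keyCache.get("provider-a")); // base64-public-key
fakeNow = 1000;
console.log(keyCache.get("provider-a")); // undefined
```

Short lifetimes matter most for policy decisions and token validation results, because a cached "allow" delays the effect of a revocation by up to one TTL.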

Best Practices and Recommendations

Follow these best practices for secure and effective ETDI implementation in production environments.

Cryptographic Security Best Practices

🔑 Strong Cryptography

- Use RSA-2048 minimum, RSA-4096 preferred for signing keys

- ECDSA P-256 or P-384 for performance-critical applications

- SHA-256 minimum for hashing, SHA-384 preferred

- Implement cryptographic agility for algorithm upgrades

🔄 Key Management

- Implement automated key rotation (annually minimum)

- Use Hardware Security Modules (HSMs) for high-value keys

- Maintain secure key escrow for disaster recovery

- Implement comprehensive key lifecycle management

📜 Certificate Management

- Establish clear certificate authority hierarchy

- Implement automated certificate renewal

- Maintain current Certificate Revocation Lists

- Use OCSP for real-time revocation checking

🛡️ Signature Verification

- Always verify complete certificate chains

- Implement timestamp validation with tolerance

- Cache verification results with TTL limits

- Log all verification failures for analysis
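Timestamp validation with tolerance can be sketched as below. The 24-hour maximum age and 5-minute clock-skew allowance are illustrative defaults, not values prescribed by ETDI, and `isTimestampAcceptable` is a hypothetical helper.

```javascript
// Illustrative limits: how old a signature may be, and how far ahead of the
// local clock its timestamp may lie before it is rejected.
const DEFAULT_LIMITS = { maxAgeMs: 24 * 60 * 60 * 1000, clockSkewMs: 5 * 60 * 1000 };

function isTimestampAcceptable(timestamp, now = Date.now(), limits = DEFAULT_LIMITS) {
  const signedAt = Date.parse(timestamp);
  if (Number.isNaN(signedAt)) return false;                  // unparseable timestamp
  if (signedAt - now > limits.clockSkewMs) return false;     // too far in the future
  return now - signedAt <= limits.maxAgeMs;                  // not too old
}

const now = Date.parse("2025-01-01T12:00:00Z");

console.log(isTimestampAcceptable("2025-01-01T11:00:00Z", now)); // true
console.log(isTimestampAcceptable("2025-01-01T12:03:00Z", now)); // true
console.log(isTimestampAcceptable("2025-01-01T12:10:00Z", now)); // false
console.log(isTimestampAcceptable("2024-12-30T12:00:00Z", now)); // false
```

The skew allowance tolerates small clock differences between signer and verifier without accepting timestamps that are clearly forged.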

OAuth Integration Best Practices

- Token Security: Use short-lived access tokens (1-hour maximum) with refresh token rotation

- Scope Design: Implement fine-grained scopes following the principle of least privilege

- PKCE Implementation: Always use PKCE for public clients, and prefer it for confidential clients as well

- Token Storage: Store tokens securely using platform-specific secure storage mechanisms

- Revocation Support: Implement immediate token revocation capabilities
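As an illustration of the PKCE recommendation, the sketch below generates a code verifier and its S256 code challenge as defined in RFC 7636, using Node.js built-ins. The function names and the returned object shape are assumptions; the authorization request and token exchange themselves are omitted.

```javascript
const { randomBytes, createHash } = require("node:crypto");

// S256 challenge: base64url-encoded SHA-256 digest of the verifier
function challengeFor(codeVerifier) {
  return createHash("sha256").update(codeVerifier, "ascii").digest("base64url");
}

// Create a fresh verifier/challenge pair for one authorization request
function createPkcePair() {
  const codeVerifier = randomBytes(32).toString("base64url"); // 43 URL-safe characters
  return {
    codeVerifier,                               // kept secret by the client
    codeChallenge: challengeFor(codeVerifier),  // sent with the authorization request
    codeChallengeMethod: "S256"
  };
}

const pkce = createPkcePair();
console.log(pkce.codeChallengeMethod, pkce.codeVerifier.length); // S256 43
```

The client sends `codeChallenge` and `codeChallengeMethod` when starting the authorization flow and presents `codeVerifier` when redeeming the authorization code, so an intercepted code is useless without the verifier.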

Policy Engine Best Practices

// Example Best Practice Cedar Policy Structure
// Policy: Comprehensive data access control with context awareness
permit(
  principal in ToolGroup::"TrustedDataProcessors",
  action in [Action::"ReadFile", Action::"ProcessDocument"],
  resource in DataFolder::"CustomerData"
) when {
  // Time-based restrictions
  context.request.time.hour >= 6 &&
  context.request.time.hour <= 22 &&
  // Location restrictions
  context.user.location in Location::"ApprovedOffices" &&
  // Device security requirements
  context.device.isManaged == true &&
  context.device.encryptionEnabled == true &&
  // User authorization requirements
  context.user.hasValidMFA == true &&
  context.user.trainingCurrent == true &&
  // Data classification matching
  principal.dataClassificationLevel >= resource.requiredClearance &&
  // Rate limiting
  context.session.requestCount <= 100
};

// Explicit deny for sensitive operations
forbid(
  principal,
  action == Action::"ExportData",
  resource
) when {
  resource.classification == "HIGHLY_CONFIDENTIAL" &&
  !context.user.hasSpecialApproval
};

Operational Security Recommendations

- Comprehensive Logging: Log all authentication, authorization, and policy decisions with sufficient detail for forensic analysis

- Anomaly Detection: Implement machine learning-based detection of unusual tool usage patterns

- Incident Response: Develop specific incident response procedures for ETDI security events

- Regular Audits: Conduct quarterly security audits of tool definitions, policies, and access patterns

- Performance Monitoring: Monitor latency impact of verification and policy evaluation processes

User Experience Optimization

📱 Approval Workflows

Design intuitive approval interfaces that clearly explain tool capabilities and risks without overwhelming users with technical details.

🔄 Progressive Disclosure

Implement progressive disclosure of tool permissions, showing basic permissions first with an option to view detailed technical information.

📊 Risk Communication

Use visual risk indicators and plain language descriptions to help users make informed security decisions.

⚡ Performance Transparency

Provide clear feedback when security checks are occurring to maintain user confidence in system responsiveness.

Ecosystem Development Guidelines

- Standardization: Contribute to MCP specification enhancement for ETDI adoption

- Tool Provider Education: Develop comprehensive documentation and training for tool providers

- Client Library Development: Create reference implementations for major programming languages

- Testing Framework: Establish comprehensive testing suites for ETDI compliance verification

- Community Engagement: Foster community adoption through open-source implementations and documentation